AI-Powered Scam Assembly Lines: What DFIR Teams Should Hunt and How to Respond

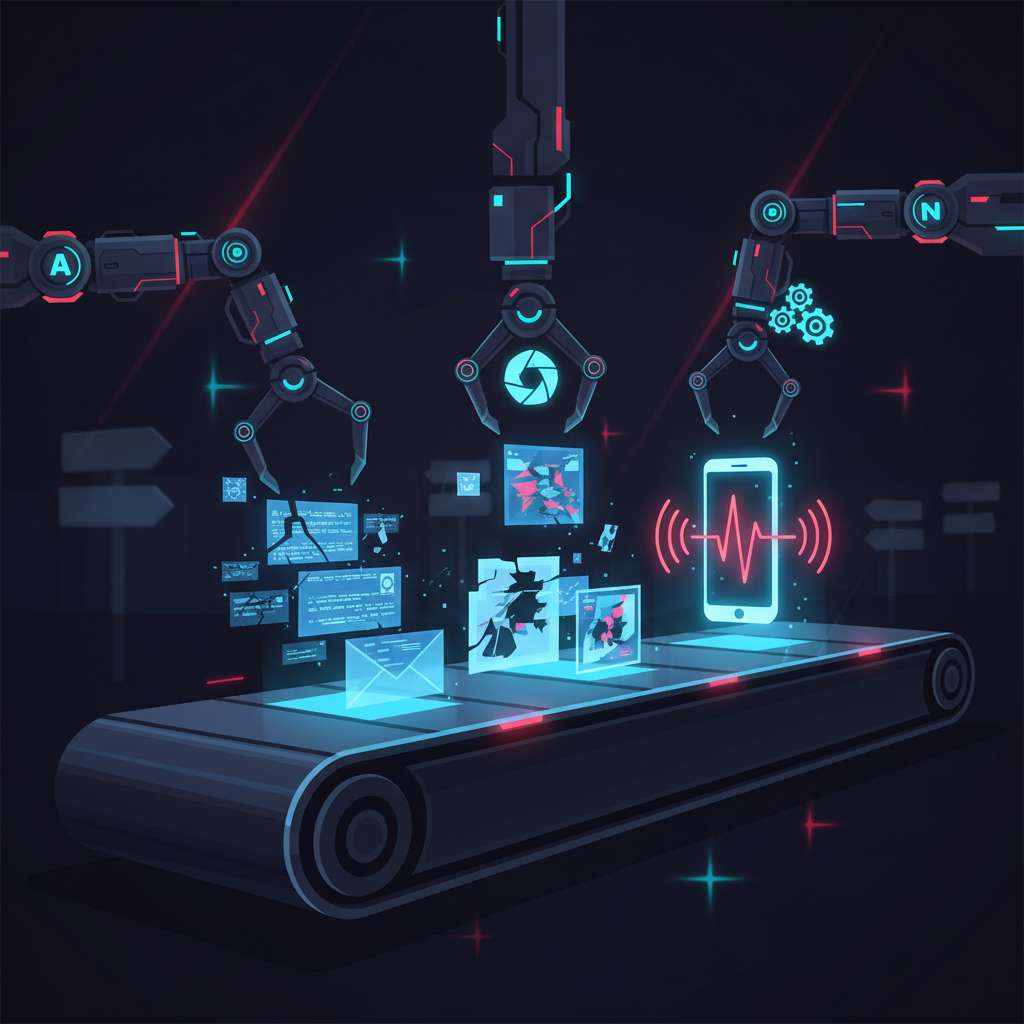

Trend Micro’s latest research reconstructs an end-to-end “scam assembly line” where threat actors chain large language model (LLM) lure generation, synthetic voice/video, and low-code automation (n8n) to spin up convincing phishing and merchandise scams at scale with minimal effort. The demo highlights modular pipelines that can swap prompts, assets, and delivery channels while hiding behind disposable infrastructure and cloud services (Trend Micro, Nov 18, 2025).

Intrusion Flow

- Lure generation and refinement

- Actors use LLMs to draft spear-phishing or promo copy and iteratively “jailbreak” guardrails through multi-turn prompt patterns, improving persuasion and filter evasion (Microsoft Security on Crescendo jailbreaks; OpenAI/Microsoft disruption of state-linked misuse).

- Synthetic assets

- Deepfake voice and avatar video are used to front fake product ads, support calls, or romance/investment pitches, with a measurable rise in AI-voice fraud calls reported in 2024-2025 (Hiya Q4 2024 call threat report release; FTC consumer alert and challenge outcomes on voice-clone detection/watermarking).

- Automation pipeline (orchestrator)

- Low-code platforms like n8n can chain text, image, TTS, and video services into agentic workflows that batch-produce lures and publish them across email, web, and social channels (Trend Micro lab pipeline).

- Delivery and infrastructure

- Distribution leans on clusters of newly registered or short-lived domains and lookalikes; domain age and deceptive links remain top phishing indicators in enterprise telemetry (Cloudflare phishing report). Short-lived domains are common: Spamhaus observed ~89% of studied phishing domains active for <48 hours, reflecting a churn model that evades daily snapshots and takedown latency (Spamhaus passive DNS research; DarkDNS paper on visibility gaps for transient domains). Some campaigns also rent fast-flux and bulletproof hosting to rotate IPs and extend uptime (Bitsight fast-flux investigation).

- Monetization and follow-through

- Voice-cloned robocalls and impersonation continue despite new U.S. prohibitions on AI-voice robocalls under the TCPA, raising both criminal and regulatory exposure for operators and facilitators (AP coverage of FCC ruling).

Key Artifacts to Pull

- Email and messaging

- Full RFC822 source with Received chain, DKIM/DMARC/SPF results, display-name vs. header anomalies, and any URL shorteners or tracking parameters aligned to the same infrastructure cluster (Cloudflare phishing indicators include domain age and deceptive links).
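As a rough illustration of the email triage described above, the sketch below pulls the Received chain, SPF/DKIM/DMARC verdicts, and a display-name vs. sender-domain check out of raw RFC822 source using only the Python standard library. The function name `summarize_headers` and the returned field names are hypothetical, and the display-name check is a crude heuristic (it only notices a domain-like string in the display name that differs from the real sender domain), so treat it as a starting point rather than a complete parser.

```python
import re
from email import message_from_string
from email.utils import parseaddr

# Matches verdicts such as "spf=pass" or "dmarc=fail" in Authentication-Results.
AUTH_VERDICT = re.compile(r"\b(spf|dkim|dmarc)=(\w+)")
# Matches domain-like strings (for example "paypal.com") inside a display name.
DOMAIN_LIKE = re.compile(r"[a-z0-9-]+(?:\.[a-z0-9-]+)+")


def summarize_headers(raw: str) -> dict:
    """Extract triage-relevant fields from raw RFC822 message source."""
    msg = message_from_string(raw)

    display_name, address = parseaddr(msg.get("From", ""))
    from_domain = address.rpartition("@")[2].lower()

    # Concatenate every Authentication-Results header and collect the verdicts.
    auth_text = " ".join(msg.get_all("Authentication-Results", [])).lower()
    verdicts = dict(AUTH_VERDICT.findall(auth_text))

    # Heuristic: a domain shown in the display name that is not the sender domain.
    shown_domains = DOMAIN_LIKE.findall(display_name.lower())
    mismatch = any(d != from_domain for d in shown_domains)

    return {
        "from_domain": from_domain,
        "display_name": display_name,
        "display_name_mismatch": mismatch,
        "received_hops": msg.get_all("Received", []),  # newest hop first
        "auth": verdicts,
    }
```

Feeding the result into the same store as proxy and DNS data makes it easier to line up sender domains with the infrastructure cluster they belong to.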

- Web infrastructure

- Server and CDN logs for landing pages; TLS cert details; hosting ASNs; shared analytics IDs; favicon/screenshot hashes for kit clustering; newly registered domain (NRD) metadata and WHOIS; passive DNS pivoting across A/AAAA/CNAME/NS records to reveal rotating IP sets and lookalikes (Spamhaus: short duty cycle on phishing domains; DarkDNS transient-domain visibility; Bitsight fast-flux characteristics).
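One way to picture the passive DNS pivoting above is as a grouping problem: domains that share an IP address or nameserver end up in the same cluster. The sketch below does that with a small union-find over `(domain, rrtype, value)` tuples. The record shape, the `PIVOT_TYPES` set, and the function name are assumptions for illustration, not the export format of any particular pDNS provider. Shared CDN or parking IPs will merge unrelated domains, so clusters need analyst review before blocking.

```python
from collections import defaultdict

# Record types treated as pivots; CNAME targets could be added the same way.
PIVOT_TYPES = {"A", "AAAA", "NS"}


def cluster_domains(records):
    """Group domains that share any A/AAAA/NS value.

    records: iterable of (domain, rrtype, value) tuples (hypothetical shape).
    Returns a list of sorted domain lists, largest cluster first.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_owner = {}  # (rrtype, value) -> first domain seen with that value
    for domain, rrtype, value in records:
        domain = domain.lower().rstrip(".")
        find(domain)  # register the domain even if it never pivots
        if rrtype.upper() not in PIVOT_TYPES:
            continue
        key = (rrtype.upper(), value.lower().rstrip("."))
        if key in first_owner:
            union(first_owner[key], domain)
        else:
            first_owner[key] = domain

    clusters = defaultdict(set)
    for domain in parent:
        clusters[find(domain)].add(domain)
    return sorted(
        (sorted(members) for members in clusters.values()),
        key=lambda members: (-len(members), members),
    )
```

Large clusters that appeared within the last 48 hours are the ones most worth escalating, given the short lifetimes described earlier.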

- Synthetic media

- Original audio/video files when available; obtain transcoding logs from comms platforms; evaluate for liveness/clone indicators per emerging techniques (e.g., real-time clone detection and watermarked provenance described in FTC challenge winners) (FTC consumer alert).

- Automation backends (self-hosted n8n or similar)

- If your environment runs n8n, collect workflow JSON, execution logs, credentials vault exports, environment variables, and API audit logs. The public REST API exposes workflow administration; disable if unused and scope API keys tightly (n8n API docs; disable public API guidance).

- Validate patch levels against recent n8n CVEs that could aid takeover or phishing distribution, such as open-redirect in login (<1.98.0), DoS via binary-data endpoint (<1.99.0), stored XSS in LangChain Chat Trigger (<1.107.0), symlink traversal in file node (<1.106.0), and RCE via Git node pre-commit (<1.113.0) (NVD CVE-2025-49592; CVE-2025-49595; CVE-2025-58177; CVE-2025-57749; CVE-2025-62726).
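The patch-level check above can be scripted against an inventory of n8n instances. The sketch below encodes the fixed-in versions exactly as listed in the text and reports which issues still apply to a given version string. The CVE IDs are paired with issues in the order the text lists them, which is an assumption worth confirming against NVD before relying on it; the function names are hypothetical, and how you obtain the running version is left to your environment.

```python
import re

# (CVE ID, issue, first fixed version) as listed in the text above.
# The CVE-to-issue pairing follows the order of that list and is an assumption.
FIXED_IN = [
    ("CVE-2025-49592", "open redirect in login", (1, 98, 0)),
    ("CVE-2025-49595", "DoS via binary-data endpoint", (1, 99, 0)),
    ("CVE-2025-58177", "stored XSS in LangChain Chat Trigger", (1, 107, 0)),
    ("CVE-2025-57749", "symlink traversal in file node", (1, 106, 0)),
    ("CVE-2025-62726", "RCE via Git node pre-commit", (1, 113, 0)),
]


def parse_version(text: str) -> tuple:
    """Parse 'v1.105.2' or '1.105.2-beta' into (1, 105, 2)."""
    match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if not match:
        raise ValueError(f"unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def unpatched(version_text: str) -> list:
    """Return (cve, issue, fixed_version) entries that still affect this version."""
    version = parse_version(version_text)
    return [
        (cve, issue, ".".join(map(str, fixed)))
        for cve, issue, fixed in FIXED_IN
        if version < fixed
    ]


if __name__ == "__main__":
    for cve, issue, fixed in unpatched("1.105.2"):
        print(f"{cve}: {issue} (fixed in {fixed})")
```

Comparing versions as integer tuples avoids the string-comparison trap where "1.99.0" sorts after "1.113.0".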

Detection Notes

- Cluster the infrastructure, not the copy

- Prioritize hunts for NRDs and lookalikes seen in email and web logs within their first 72 hours; many phishing domains die in under two days, so velocity detection matters (Spamhaus 48-hour activity finding).

- Example Splunk: clicks to NRDs (domain age <= 7 days) from mail links

      index=proxy OR index=web (http_status=200 OR http_status=302)
      | eval host=coalesce(cs_host, dest_host, uri_host)
      | lookup whois_domains domain as host OUTPUT domain_age_days
      | where domain_age_days <= 7
      | stats count dc(src_ip) dc(user) values(referer) by host
      | sort - count

- Example pDNS pivot: fast-flux / low-TTL clusters

Use ASN and nameserver co-occurrence to fingerprint rotating hosting and kit reuse (Bitsight fast-flux traits).

    -- Pseudo-SQL over PDNS
    SELECT qname,
           count(DISTINCT rrdata)   AS ips,
           min(ttl)                 AS min_ttl,
           count(DISTINCT resolver) AS resolvers
    FROM pdns
    WHERE first_seen > now() - interval '48 hours'
    GROUP BY qname
    HAVING count(DISTINCT rrdata) >= 10 OR min(ttl) <= 120
    ORDER BY ips DESC;

- Voice/phone fraud signals

- Blend call intelligence with IT telemetry (voice events followed by login attempts or payment flows); vendors observed rising deepfake call losses in late 2024 (Hiya report summary). The FCC has ruled AI-voice robocalls illegal under the TCPA, which aids attribution and disruption (AP on FCC action).
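The idea of blending voice events with IT telemetry can be sketched as a simple time-window join: for each suspicious inbound call to a user, look for login attempts by that same user shortly afterwards. The event shapes (`ts`, `user`, `caller_id`, `outcome`), the 30-minute default window, and the function name are all assumptions for illustration; real call-intelligence and identity logs will need their own field mapping.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate_calls_with_logins(calls, logins, window=timedelta(minutes=30)):
    """Pair each call with login attempts by the same user inside the window.

    calls:  list of dicts with keys "ts" (datetime), "user", "caller_id"
    logins: list of dicts with keys "ts" (datetime), "user", "outcome"
    Returns one finding per call that has at least one follow-up login.
    """
    logins_by_user = defaultdict(list)
    for login in logins:
        logins_by_user[login["user"]].append(login)

    findings = []
    for call in calls:
        deadline = call["ts"] + window
        followups = [
            login
            for login in logins_by_user.get(call["user"], [])
            if call["ts"] <= login["ts"] <= deadline
        ]
        if followups:
            findings.append(
                {
                    "user": call["user"],
                    "caller_id": call["caller_id"],
                    "call_ts": call["ts"],
                    "followups": sorted(followups, key=lambda l: l["ts"]),
                }
            )
    return findings


if __name__ == "__main__":
    calls = [{"ts": datetime(2025, 11, 18, 10, 0), "user": "alice", "caller_id": "+15550100"}]
    logins = [{"ts": datetime(2025, 11, 18, 10, 12), "user": "alice", "outcome": "failed"}]
    for finding in correlate_calls_with_logins(calls, logins):
        print(finding["user"], finding["caller_id"], len(finding["followups"]))
```

The same join works for payment or password-reset events; only the second event list changes.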

- Model/agent risk in your stack

- Treat AI assistants as part of the attack surface; indirect prompt injection remains a practical vector against summarizers and mail triage tools (Mozilla 0Din finding on Gemini Workspace summarizer manipulation; CISA multi-nation guidance for using AI securely).
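As one possible pre-filter in front of a mail summarizer, the sketch below flags text in an HTML body that is styled to be invisible to a human reader (zero font size, hidden display, white text, zero opacity). That hidden-styling pattern is an assumption about how injected instructions might be smuggled in, not a detail taken from the cited finding, and the heuristic only inspects inline `style` attributes. Malformed HTML with unclosed tags can throw off the nesting, so treat hits as leads for review rather than verdicts.

```python
import re
from html.parser import HTMLParser

# Inline styles that commonly hide text from a human reader (heuristic list).
HIDDEN_STYLE = re.compile(
    r"font-size\s*:\s*0(?![.\d])"
    r"|display\s*:\s*none"
    r"|visibility\s*:\s*hidden"
    r"|opacity\s*:\s*0(?![.\d])"
    r"|(?<![-\w])color\s*:\s*(?:#fff(?:fff)?\b|white\b)",
    re.IGNORECASE,
)
# Elements that never get a closing tag and so must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    def __init__(self):
        super().__init__(convert_charrefs=True)
        self._hidden_stack = []  # one bool per open element: is its subtree hidden?
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        inherited = bool(self._hidden_stack and self._hidden_stack[-1])
        self._hidden_stack.append(inherited or bool(HIDDEN_STYLE.search(style)))

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._hidden_stack:
            self._hidden_stack.pop()

    def handle_data(self, data):
        if self._hidden_stack and self._hidden_stack[-1] and data.strip():
            self.hidden_text.append(data.strip())


def find_hidden_text(html: str) -> list:
    """Return text fragments that sit inside visually hidden elements."""
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text
```

Messages with any hidden text could be routed around auto-summarization or summarized from a plain-text rendering instead.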

Response Guidance

- Triage fast, contain faster

- Quarantine endpoints that interacted with suspected NRDs/lookalikes; block and sinkhole the domain cluster and any shared IPs/NS immediately to beat the 48-hour churn (Spamhaus short-lived domain analysis).

- Eradicate pipeline footholds

- If n8n (or similar) is present internally, rotate API keys, disable the public API where unused, and patch to remediate recent CVEs; export workflow JSON for IR timelines and disable active lures (n8n API; disable public API; NVD/GitLab advisories).

- Takedown and legal levers

- Submit abuse to registrars/hosts with evidence of brand impersonation, fast-flux indicators, and victim impact. For AI-voice robocalls, cite the FCC prohibition when engaging telecom partners and state AGs to expedite blocks and civil actions (AP on FCC ban).

- Harden controls for the next wave

- Enforce NRD/“confusable” domain blocks or holds at the gateway; several providers support lookalike and scam categories to preempt clicks (Cloudflare brand impersonation defenses; Cloudflare Gateway “Scam” category).

- Sandbox or disable AI auto-summarization on untrusted content; adopt allow-lists and content provenance where available (CISA secure AI use).

- Train helpdesk and finance staff to use challenge-response verification for voice instructions; FTC guidance highlights real-time liveness/clone detection and watermarking concepts that can be integrated into call flows (FTC consumer alert).
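For the confusable-domain control mentioned in this section, a minimal sketch of lookalike matching is shown below. It normalizes a few common character swaps (such as `1` for `l` or `rn` for `m`), splits the domain into tokens on dots and hyphens, and compares each token to a brand list by edit distance. The substitution table, the distance threshold, and the function names are assumptions chosen for illustration; short brand names will produce false positives, and exact brand tokens on attacker-controlled domains (combosquatting) are deliberately out of scope here.

```python
import re

# A small, hand-picked set of digit-for-letter swaps (assumption, not exhaustive).
CONFUSABLES = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s", "7": "t"})


def skeleton(token: str) -> str:
    """Normalize common visual substitutions so lookalikes collapse together."""
    return token.lower().translate(CONFUSABLES).replace("rn", "m").replace("vv", "w")


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance using a rolling row."""
    prev = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, 1):
        cur = [i]
        for j, ch_b in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ch_a != ch_b)))
        prev = cur
    return prev[-1]


def match_brand(domain: str, brands, max_distance: int = 1):
    """Return the brand a domain token imitates, or None.

    A token that equals the brand exactly is skipped, so the brand's own
    domain is not flagged.
    """
    tokens = [t for t in re.split(r"[.-]", domain.lower()) if t]
    for brand in brands:
        brand = brand.lower()
        for token in tokens:
            if token == brand:
                continue
            if edit_distance(skeleton(token), skeleton(brand)) <= max_distance:
                return brand
    return None


if __name__ == "__main__":
    for candidate in ["paypa1-secure.example", "rnicrosoft.example", "weather.example"]:
        print(candidate, "->", match_brand(candidate, ["paypal", "microsoft"]))
```

Combining a hit from this check with a domain age of a few days gives a stronger hold-or-block signal than either indicator alone.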

Takeaways

- Hunt infrastructure clusters: NRDs, lookalikes, and fast-flux indicators are your highest-leverage pivots in the first 48 hours (Spamhaus; Bitsight).

- Treat low-code orchestrators as Tier-1 assets: lock down n8n APIs, rotate credentials, and patch recent CVEs before they become distribution points (n8n docs; NVD/GitLab).

- Don’t trust “smart” summarizers by default: indirect prompt injection against AI assistants is practical; sandbox or disable on untrusted mail/web (Gemini finding via 0Din; CISA).

- Use telecom and legal levers early for voice-clone fraud; the policy environment now supports faster blocking of AI-voice robocalls (AP on FCC rule).